Rongsheng Wang

Ph.D., The Chinese University of Hong Kong, Shenzhen (2025)

I am Rongsheng Wang (王荣胜), currently pursuing my Ph.D. at The Chinese University of Hong Kong, Shenzhen. My primary research interests lie in Large Language Models (LLMs) and Multimodal LLMs (MLLMs). I love open source and sharing useful knowledge with everyone. I have been featured in China-Ranking and recognized as an outstanding individual developer on GitHub in China. I actively contribute to the open-source community on 👾GitHub.

Education

- The Chinese University of Hong Kong, Shenzhen: Ph.D. in Computational Biology and Health Informatics, Sep. 2025 - Now

- Macao Polytechnic University: M.S. in Big Data and Internet of Things, Sep. 2022 - Jul. 2024

- Henan Polytechnic University: B.S. in Computer Science (AI), Sep. 2018 - Jul. 2022

Honors & Awards

- 🥈Second Prize of Yidu Healthcare Hackathon 2025

- 🥇First Prize of LIC 2025 2025

- 🎫Kaggle Competitions Expert 2025

- 🥇Gold Medal of Kaggle AI Mathematical Olympiad - Progress Prize 2 2025

- 🎫Outstanding Award of JingDong Health - Global AI Innovation Competition 2024

- 🥇First Prize of Baidu PaddlePaddle AGI Hackathon 2024

- 🥉Third Prize of DiMTAIC (Organized by JingDong Health) 2023

- 🥉Third Prize of Baichuan Intelligence and Amazon Cloud AGI Hackathon 2023

- 🥈Silver Medal of Kaggle RSNA Screening Mammography Cancer Detection 2023

- 🎫Outstanding Award of IEEE UV 2022 Object Detection Challenge 2022

Experience

- CUHK (SZ): Research Assistant (supervisor: Benyou Wang), Sep. 2024 - Sep. 2025

- Qiyuan.Tech: CTO, Oct. 2023 - Now

Service

- Reviewer of Journal of Biomedical and Health Informatics (JBHI)

- Reviewer of IEEE Transactions on Medical Imaging (TMI)

- Reviewer of ICME 2025

Selected Publications

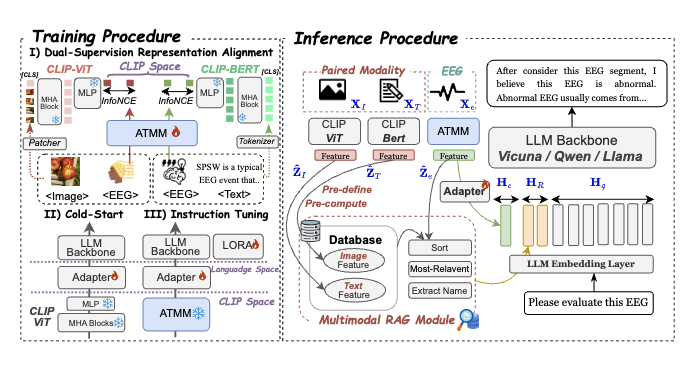

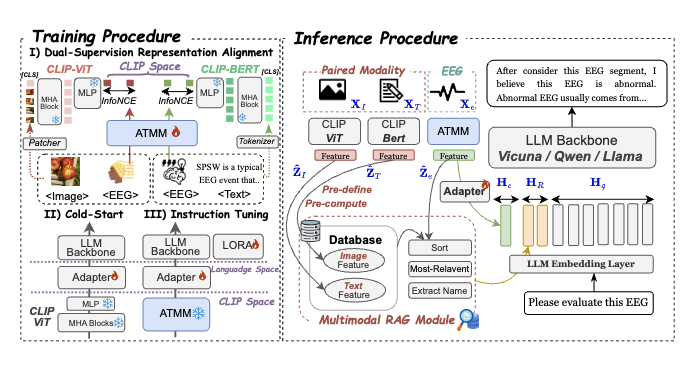

Towards a Conversational EEG Foundation Model Aligned to Textual and Visual Modalities

Ziyi Zeng, Zhenyang Cai, Yixi Cai, Xidong Wang, Junying Chen, Rongsheng Wang, Yipeng Liu, Siqi Cai, Benyou Wang†, Zhiguo Zhang, Haizhou Li († corresponding author)

arXiv 2025 Conference

We introduce WaveMind, a multimodal large language model that unifies EEG and paired modalities in a shared semantic space for generalized, conversational brain-signal interpretation.

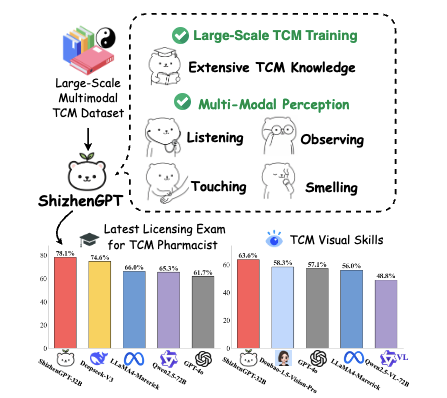

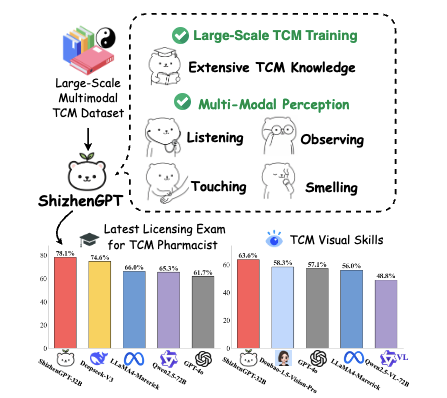

Towards Multimodal LLMs for Traditional Chinese Medicine

Junying Chen, Zhenyang Cai, Zhiheng Liu, Yunjin Yang, Rongsheng Wang, Qingying Xiao, Xiangyi Feng, Zhan Su, Jing Guo, Xiang Wan, Guangjun Yu, Haizhou Li, Benyou Wang† († corresponding author)

arXiv 2025 Conference

We introduce ShizhenGPT, the first multimodal LLM tailored for Traditional Chinese Medicine, designed to overcome data scarcity and enable holistic perception across text, images, audio, and physiological signals for advanced TCM diagnosis and reasoning.

A Large-Scale Diversified Dataset for Audio-Driven Talking Head Synthesis

Shunian Chen, Hejin Huang, Yexin Liu, Zihan Ye, Pengcheng Chen, Chenghao Zhu, Michael Guan, Rongsheng Wang, Junying Chen, Guanbin Li, Ser-Nam Lim, Harry Yang, Benyou Wang† († corresponding author)

arXiv 2025 Conference

We introduce TalkVid, a large-scale, high-quality, and demographically diverse video dataset with an accompanying benchmark that enables more robust, fair, and generalizable audio-driven talking head synthesis.

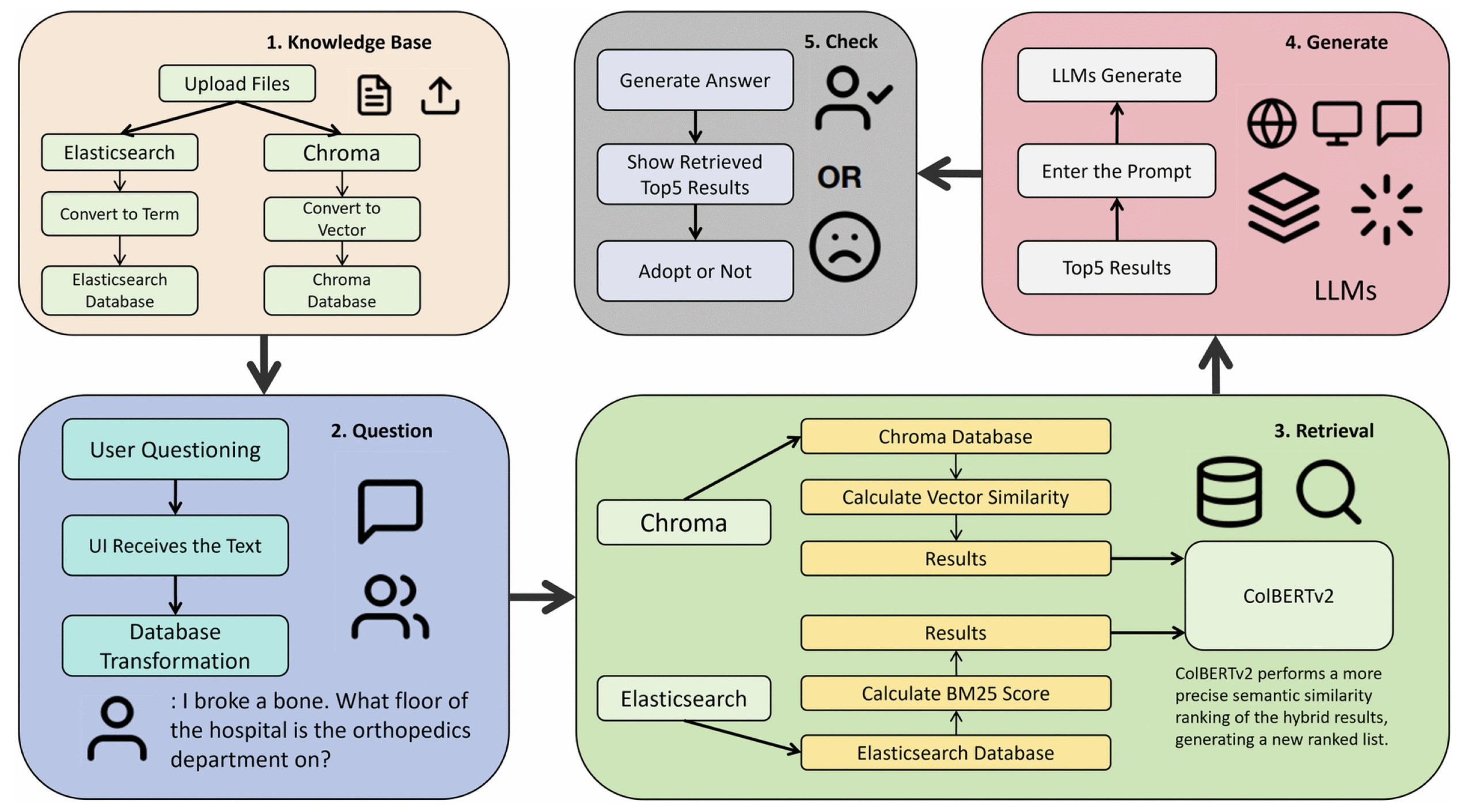

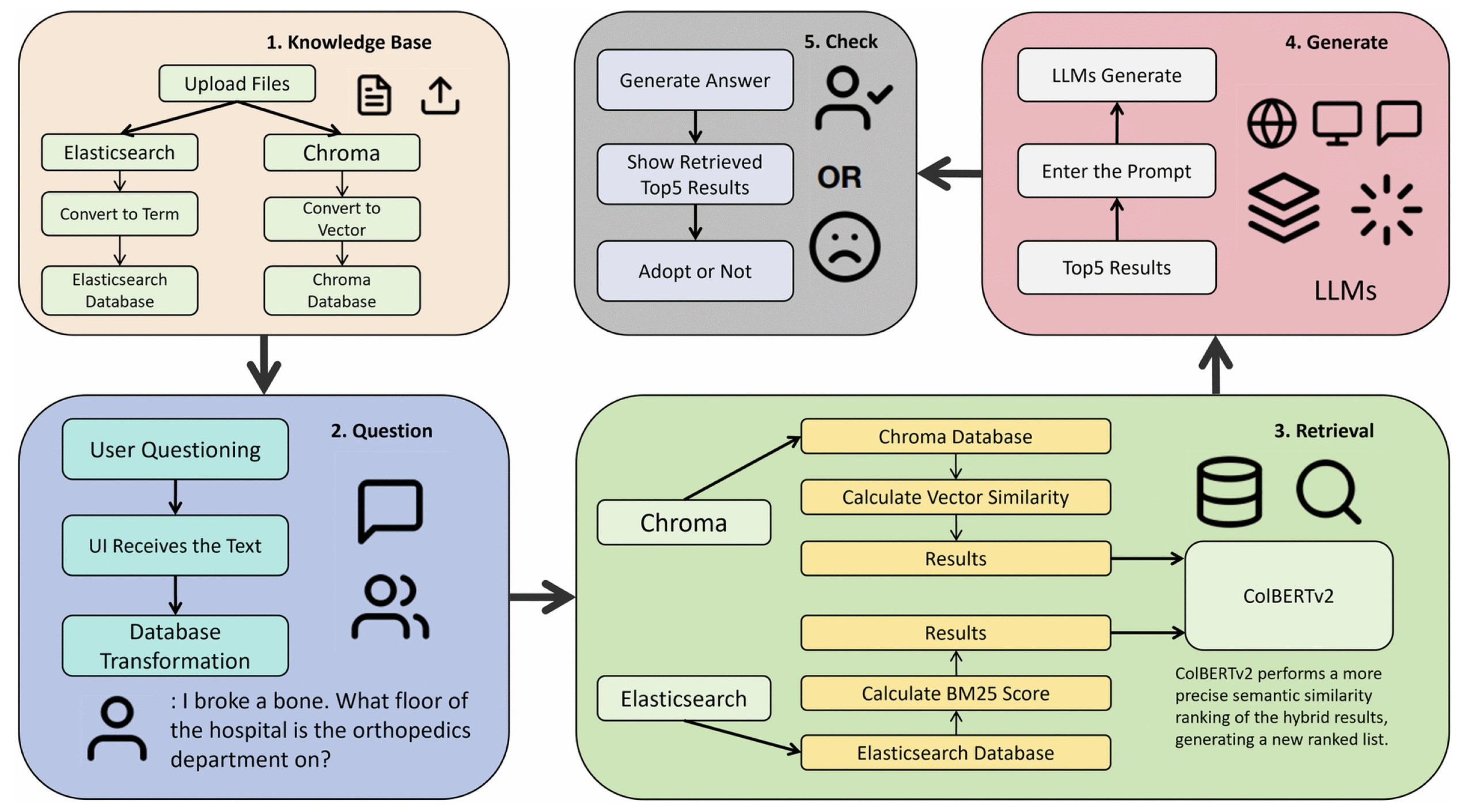

Dual Retrieving and Ranking Medical Large Language Model with Retrieval Augmented Generation

Qimin Yang, Huan Zuo, Runqi Su, Hanyinghong Su, Tangyi Zeng, Huimei Zhou, Rongsheng Wang, Jiexin Chen, Yijun Lin, Zhiyi Chen, Tao Tan† († corresponding author)

Scientific Reports 2025 Journal

We propose a two-step retrieval-augmented generation framework that combines embedding search and Elasticsearch with ColBERTv2 ranking, achieving a 10% accuracy boost on complex medical queries while addressing real-time deployment challenges.

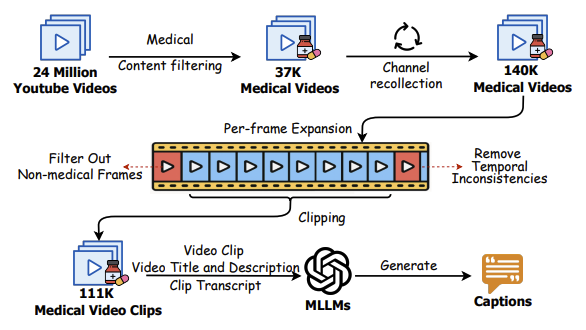

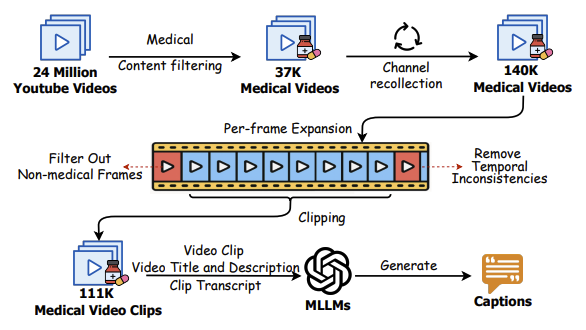

Unlocking Medical Video Generation by Scaling Granularly-annotated Medical Videos

Rongsheng Wang, Junying Chen, Ke Ji, Zhenyang Cai, Shunian Chen, Yunjin Yang, Benyou Wang† († corresponding author)

arXiv 2025 Conference

We introduce MedVideoCap-55K, the first large-scale, diverse, and caption-rich dataset designed for medical video generation. Comprising over 55,000 curated clips from real-world clinical scenarios, it addresses the critical need for both visual fidelity and medical accuracy in applications such as training, education, and simulation.

RedNote

Baidu PPDE

Kaggle Competition Master
